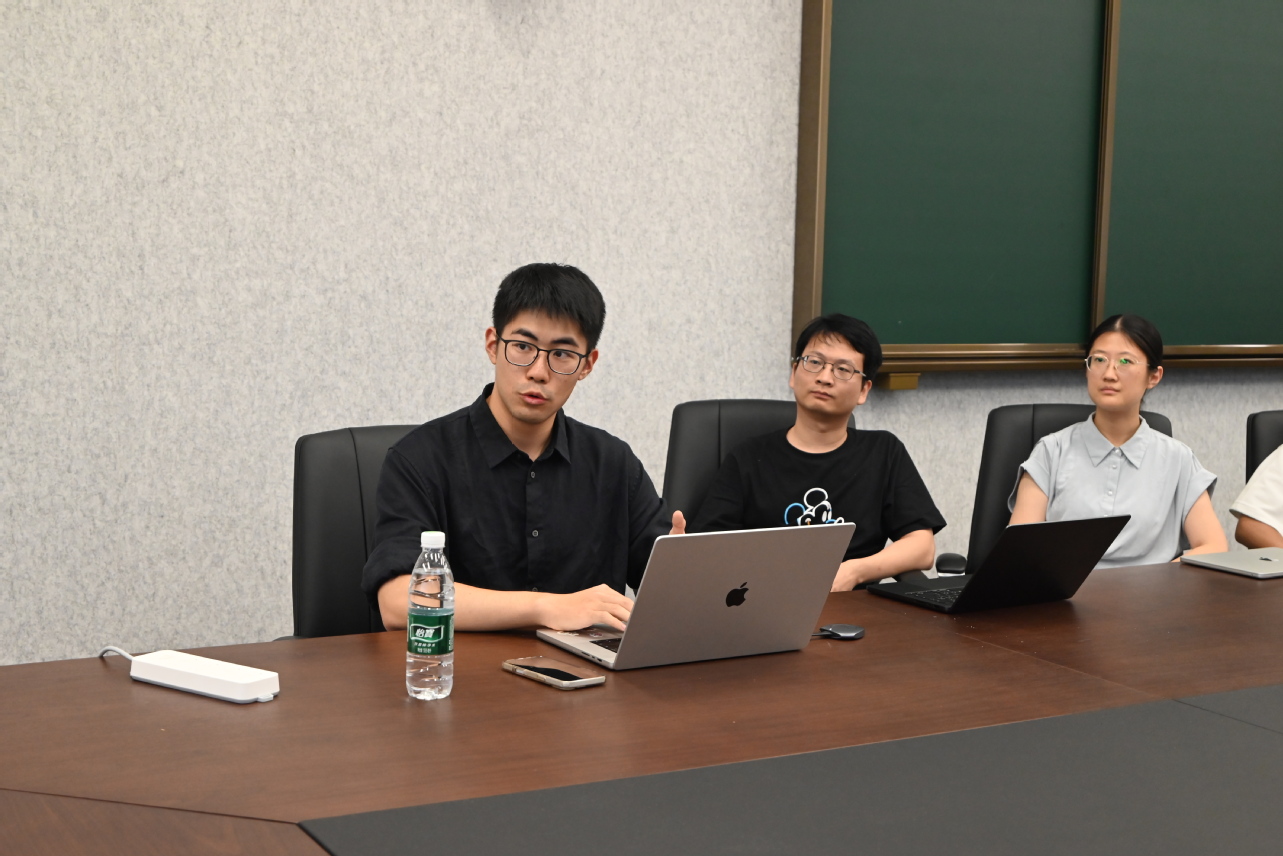

At 14:00 on June 20, 2025, Dr. Liu Xian'an, a research scientist at NVIDIA, was invited by Assistant Professor Si Chenyang of our institute to give an academic talk titled “Towards Multi-Modal Visual Generation: From Human Modeling to World Foundation Models.”

Abstract:

Multimodal visual generation is attracting growing attention in both research and applications. This talk will trace the development of multimodal visual generation from digital human generation to world models. It will discuss how to improve the quality of digital human generation within a foundation-model framework, and how to strengthen foundation models with domain-specific prior knowledge.

Speaker Biography:

Dr. Liu Xian'an is a Research Scientist at NVIDIA Research. He obtained his Ph.D. from the Chinese University of Hong Kong, where he was advised by Professor Lin Dahua and Professor Liu Ziwei. His research interests lie in computer vision and generative models, with a focus on the pre-training and post-training of foundation models for generative AI and their applications in human modeling and physical intelligence. He has published over 30 papers at top international conferences such as CVPR, NeurIPS, and ICLR, including 9 as first author, and his work has received over 1,400 citations on Google Scholar. As a core contributor to the NVIDIA Cosmos series of world models, he has extensive research experience in image and video generation foundation models (Cosmos Predict), image and video tokenizers (Cosmos Tokenizer), and multimodal controllable generation (Cosmos Transfer).