Work 1: Inverse Rendering Based on Spatially-Varying 3D Gaussians

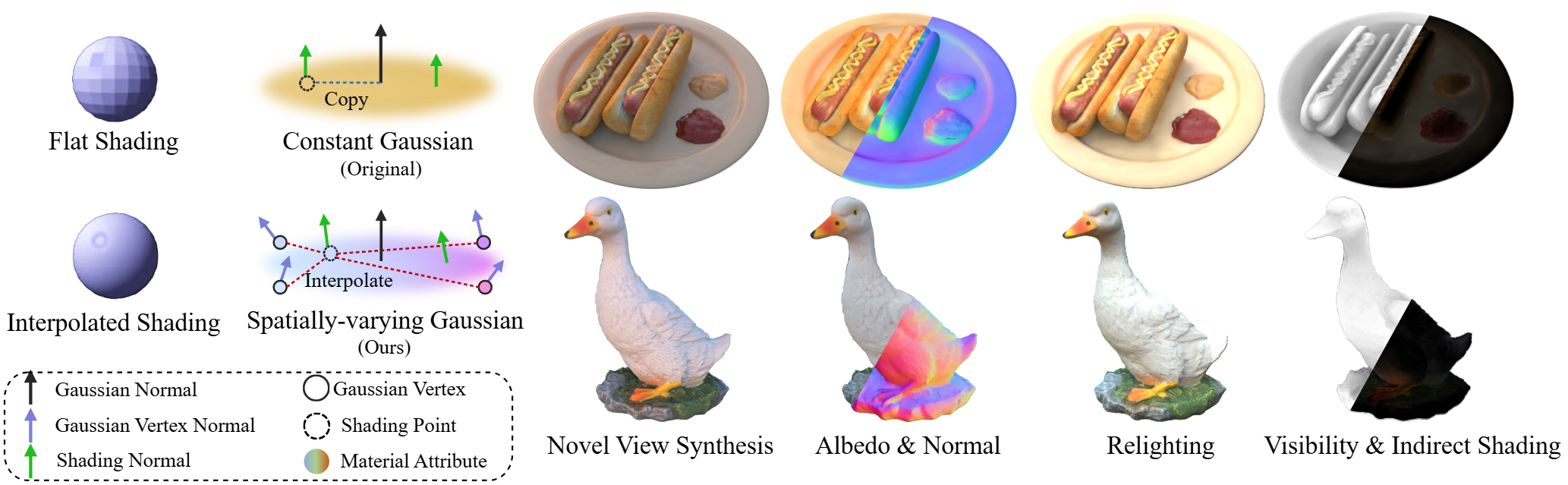

Reconstructing object geometry and material properties remains a long-standing challenge in computer graphics and computer vision. Thanks to their efficient rendering capabilities, 3D Gaussians have recently been widely adopted for novel view synthesis (NVS) and inverse rendering. However, traditional 3D Gaussians assume constant material parameters and surface normals within each Gaussian, limiting their expressiveness and leading to suboptimal quality in both view synthesis and relighting.

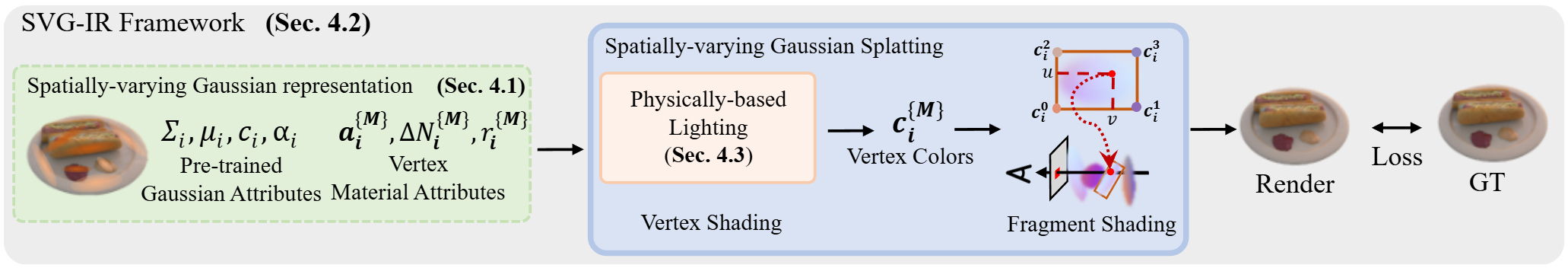

To address this issue, Professor Beibei Wang’s team at the School of Intelligence Science and Technology, Nanjing University, introduces a novel representation called Spatially-Varying Gaussian (SVG), where each Gaussian is endowed with spatially-varying reflectance and normal information. Building upon this representation, they propose SVG-IR, a spatially-varying Gaussian inverse rendering framework. SVG-IR incorporates a physically-based indirect illumination model to achieve more natural and realistic relighting results.
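To make the contrast with conventional Gaussians concrete, here is a minimal sketch assuming a deliberately simple, hypothetical scheme in which a Gaussian's albedo varies linearly across the primitive. This is not the SVG-IR formulation, which the article does not detail. The class names, the `albedo_grad` parameter, and the isotropic scale are illustrative assumptions; normals could be made to vary in an analogous way.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class ConstantGaussian:
    """Conventional 3D Gaussian: one albedo value shared by the whole primitive."""
    mean: Vec3
    scale: float   # isotropic standard deviation (simplification; 3DGS uses a full covariance)
    albedo: float  # single-channel albedo, for brevity

    def weight(self, x: Vec3) -> float:
        """Unnormalized Gaussian falloff at point x."""
        d = sub(x, self.mean)
        return math.exp(-0.5 * dot(d, d) / self.scale ** 2)

    def albedo_at(self, x: Vec3) -> float:
        return self.albedo  # same value everywhere inside the Gaussian


@dataclass
class SpatiallyVaryingGaussian(ConstantGaussian):
    """Hypothetical variant: albedo changes linearly across the primitive."""
    albedo_grad: Vec3 = (0.0, 0.0, 0.0)  # hypothetical per-Gaussian gradient

    def albedo_at(self, x: Vec3) -> float:
        value = self.albedo + dot(self.albedo_grad, sub(x, self.mean))
        return max(0.0, min(1.0, value))  # keep albedo in [0, 1]


const = ConstantGaussian(mean=(0.0, 0.0, 0.0), scale=1.0, albedo=0.5)
varying = SpatiallyVaryingGaussian(mean=(0.0, 0.0, 0.0), scale=1.0, albedo=0.5,
                                   albedo_grad=(0.2, 0.0, 0.0))
left, right = (-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)
print(const.albedo_at(left), const.albedo_at(right))  # 0.5 0.5
print(round(varying.albedo_at(left), 3), round(varying.albedo_at(right), 3))  # 0.3 0.7
```

Two points inside the same primitive receive identical material under the constant model but different values under the spatially-varying one, which is the extra expressiveness the representation is meant to provide.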

Experimental evaluations demonstrate that SVG-IR significantly improves relighting quality, outperforming state-of-the-art neural radiance field methods by 2.5 dB and existing Gaussian-based approaches by 3.5 dB, while maintaining real-time rendering speed. This work has been accepted for publication at CVPR 2025.
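The article does not name the metric behind the dB figures; assuming they are PSNR differences (the usual convention for dB-valued image-quality gains), the short calculation below translates them into error terms. Since PSNR = 10 · log10(MAX² / MSE), a gain of Δ dB means the mean squared error shrinks by a factor of 10^(Δ/10). The function names are illustrative.

```python
import math


def psnr(mse: float, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def mse_reduction_factor(delta_db: float) -> float:
    """Factor by which MSE must shrink to gain `delta_db` of PSNR."""
    return 10.0 ** (delta_db / 10.0)


for label, gain in [("vs. NeRF-based methods", 2.5), ("vs. Gaussian-based methods", 3.5)]:
    print(f"{label}: +{gain} dB -> MSE about {mse_reduction_factor(gain):.2f}x lower")
```

Under that assumption, the reported gains correspond to roughly 1.78x and 2.24x lower mean squared error, respectively.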

Figure 1. Inverse Rendering Framework Based on Spatially-Varying Gaussians

Figure 2. Illustration of Spatially-Varying Gaussians and Inverse Rendering Results

Link: https://learner-shx.github.io/project_pages/SVG-IR/index

Work 2: Relightable Neural Gaussians

3D Gaussian Splatting has emerged as a popular representation in computer graphics and 3D vision, valued for its expressive power and fast rendering. However, building relightable objects with Gaussian splatting remains challenging and typically relies on geometric and material constraints. These constraints often break down for objects such as plush toys or fabrics that lack clear surface boundaries, which makes disentangling geometry, material, and illumination significantly more difficult.

To address this issue, Professor Beibei Wang’s team from the School of Intelligence Science and Technology at Nanjing University, in collaboration with Adobe, proposes a novel Relightable Neural Gaussian representation. Rather than assuming an analytic material model, this approach uses a neural relightable material representation, which generalizes well both to objects with well-defined surfaces and to complex objects without them.
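As a rough illustration of what replacing an analytic material model with a neural one can mean, the sketch below assumes that each Gaussian carries a latent feature vector and that a small MLP maps (feature, light direction, view direction) to RGB. The architecture, layer sizes, and names are hypothetical and are not taken from the paper; the weights are random here, whereas a real system would optimize them, together with the per-Gaussian features, against captured images.

```python
import math
import random


def relu(v: float) -> float:
    return max(0.0, v)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def dense(x, weights, biases, activation):
    """Fully connected layer: activation(W x + b), applied element-wise."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


class NeuralMaterial:
    """Hypothetical neural material: (latent feature, light dir, view dir) -> RGB."""

    def __init__(self, feature_dim: int = 8, hidden: int = 16, seed: int = 0):
        rng = random.Random(seed)
        in_dim = feature_dim + 3 + 3  # feature + light direction + view direction
        self.feature_dim = feature_dim
        self.w1 = [[rng.gauss(0.0, 0.3) for _ in range(in_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 0.3) for _ in range(hidden)] for _ in range(3)]
        self.b2 = [0.0] * 3

    def shade(self, feature, light_dir, view_dir):
        if len(feature) != self.feature_dim:
            raise ValueError("feature has the wrong dimension")
        x = list(feature) + list(light_dir) + list(view_dir)
        hidden = dense(x, self.w1, self.b1, relu)
        return dense(hidden, self.w2, self.b2, sigmoid)  # RGB in (0, 1)


material = NeuralMaterial()
feature = [0.1] * 8  # stands in for one Gaussian's learned latent code
rgb = material.shade(feature, light_dir=(0.0, 0.0, 1.0), view_dir=(0.0, 0.6, 0.8))
print([round(c, 3) for c in rgb])
```

Because no BRDF formula is hard-coded, the same network can in principle fit both surface-like reflectance and the fuzzy appearance of fabrics or fur, which is the motivation the article gives.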

Furthermore, due to limitations in geometric expressiveness, traditional Gaussian-based methods often suffer from shadow artifacts. To overcome this, the proposed method introduces a shadow-aware guidance network that leverages a depth-aware refinement module to accurately estimate visibility at any point on the object, significantly enhancing the realism of shadows.
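The article does not describe how the depth-aware module works internally. For background only, the classic way to estimate visibility from depth is a shadow-map test, shown below with percentage-closer filtering (PCF) to produce fractional visibility at shadow edges. This is the conventional graphics technique, not the paper's learned network; the function names and the toy shadow map are illustrative.

```python
def hard_visibility(depth, shadow_map, u, v, bias=1e-3):
    """Classic shadow-map test: 1.0 if the point is lit, 0.0 if occluded.

    `depth` is the point's distance from the light; `shadow_map[v][u]` is the
    closest depth the light sees through that texel.
    """
    return 1.0 if depth <= shadow_map[v][u] + bias else 0.0


def pcf_visibility(depth, shadow_map, u, v, radius=1, bias=1e-3):
    """Percentage-closer filtering: average the hard test over a neighborhood."""
    height, width = len(shadow_map), len(shadow_map[0])
    lit = total = 0
    for dv in range(-radius, radius + 1):
        for du in range(-radius, radius + 1):
            uu = min(max(u + du, 0), width - 1)   # clamp to map borders
            vv = min(max(v + dv, 0), height - 1)
            lit += hard_visibility(depth, shadow_map, uu, vv, bias)
            total += 1
    return lit / total


shadow_map = [[5.0] * 3 for _ in range(3)]
shadow_map[1][1] = 2.0  # an occluder 2 units from the light at the center texel
print(hard_visibility(4.0, shadow_map, 1, 1))  # 0.0: the point behind the occluder is shadowed
print(pcf_visibility(4.0, shadow_map, 1, 1))   # 8 of 9 samples lit, about 0.889
```

Depth-based visibility of this kind is only as reliable as the depth it reads, which is one way to see why limited geometric expressiveness in Gaussian representations shows up as shadow artifacts.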

Extensive experiments on multiple datasets show that this method achieves higher relighting quality than existing Gaussian-based techniques. This work has been accepted for publication at CVPR 2025.

Figure 3. Relighting Results

Link: https://whois-jiahui.fun/project_pages/RNG